Evaluation of Science Funding Summary

About the Programs

The Ecosystem and Oceans Sciences sector has 16 funding programs where internal scientists can apply for O&M budgets to conduct research on a variety of topics that support the department’s objectives. The funds are allocated through competitive, targeted competitive or directed calls for proposals and exist alongside other Ecosystem and Oceans Science core research programs. In 2017-18, the total actual O&M expenditures for the programs were estimated to be $15,789,178.

About the evaluation

The evaluation was conducted from April 2018 to February 2019. It assessed the efficiency of the 16 science funding programs. Gender-based Analysis Plus was used as an analytical tool to examine whether any groups are under-represented, with particular attention to the number of female scientists applying for and receiving funding. Evidence was gathered from interviews with 46 internal and external individuals, a document review, process mapping, administrative data analysis, three surveys (applicants, review committee members and end-users of research) and a literature review.

Key findings

Efficiency of the funding cycle

Good practices exist within each of the funding programs and at the various stages of the funding cycle; collectively, however, the programs are not implemented in a consistent manner, which leads to overall inefficiencies. There is widespread support across the Ecosystems and Oceans Science sector for changes that would improve the efficiency of how the programs are delivered.

An internal review of the competitive funding programs conducted in 2018 generated many suggestions for improvements. Respondents to both the applicants survey and the survey of review committee members were asked to rank ideas from the review. Results of the surveys are presented below. As shown, there was strong support for improvements that standardize tools and processes.

The top ranked activities that would address inefficiencies in the application process (n=261) were:

68% said “Create a common calendar for call for proposals and review committee shared across each of the funding programs.”

65% said “Develop standardized application tools and templates across the O&M funding programs.”

The top ranked activities that would improve oversight and administration (n=251) were:

71% said “Establish clearer criteria for the type of funding approach being used to distribute the O&M research budgets (e.g. competitive or directed).”

63% said “Develop a mechanism to rank the priorities across programs.”

As ranked by respondents to the survey of review committee members only, the top activities that would address inefficiencies in the review process (n=52)* were:

75% said “Develop a consistent approach to review proposals across the funding programs for the committees.”

56% said “Develop a standardized scoring approach used by regional and national review committees.”

The top ranked activities that would improve communication (n=254) were:

74% said “Create an annual call for research proposals that is circulated to all DFO science staff.”

69% said “Develop a template for fund information, including information on the processes, fund description, overall objectives/goals.”

There is support for the use of both competitive and directed models for the solicitation of proposals. Eligible applicants, however, want more communication about the rationale behind using one model over another.

Satisfaction of clients of the programs

Internal Fisheries and Oceans Canada clients who use the research produced through the programs expressed low levels of satisfaction with how they are consulted in priority-setting exercises, noting that their priorities are not reflected in the projects that are funded or in the final research results. They were also dissatisfied with the way in which final research results are communicated to them; some informants noted that they do not always get results back. End-users commented that, to address both of these issues, there are opportunities to improve engagement at key points in the funding cycle.

The following are responses to the survey of end-users:

48% are not satisfied or only partly satisfied with the degree to which they are consulted in the priority-setting exercises, compared to 6% who are more than satisfied or very satisfied.

49% are not satisfied or only partly satisfied with the way in which research results are communicated to them, compared to 11% who are more than satisfied or very satisfied.

As shown in the figure below, there are opportunities for improved client engagement at various stages in the funding cycle.

Description

This figure is a diagram of the Ecosystem and Oceans Science funding cycle. The process flows through the following stages: priority-setting, call for proposals, the review of proposals and approval of projects, the transfer of funding, research, and finally reporting. This figure also shows that there are opportunities to improve client engagement when setting priorities, reviewing proposals and approving projects, and at the reporting stage.

Level of effort associated with funding programs

Respondents to the survey of researchers/scientists (n=173) reported a total of 4,374 days in 2017-18 dedicated to administration, developing proposals and reporting for the funding programs. When the total number of days reported by respondents to the applicants survey is converted to salary dollars, the value of researchers’ time is estimated to range from $1.4M to $1.9M for the 2017-18 fiscal year. Respondents to the review committee survey (n=53) reported a total of 518 days in 2017-18 dedicated to assessing letters of intent, applications or other aspects of the funding programs.
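As a rough check on the conversion described above, the reported totals can be turned back into an implied daily salary rate. This is only a sketch: the summary does not state the salary rates or the method actually used, so the function name `implied_daily_rate` and the resulting per-day figures are illustrative assumptions derived from the published totals.

```python
# Figures reported in the summary for the applicants survey, 2017-18.
TOTAL_DAYS = 4_374          # days reported by respondents
RESPONDENTS = 173           # number of survey respondents (n)
SALARY_LOW = 1_400_000      # lower salary estimate, in dollars
SALARY_HIGH = 1_900_000     # upper salary estimate, in dollars


def implied_daily_rate(total_salary: float, total_days: int) -> float:
    """Return the average dollars per day implied by a salary total.

    Hypothetical helper: the evaluation does not publish its rates.
    """
    return total_salary / total_days


low_rate = implied_daily_rate(SALARY_LOW, TOTAL_DAYS)
high_rate = implied_daily_rate(SALARY_HIGH, TOTAL_DAYS)
days_per_respondent = TOTAL_DAYS / RESPONDENTS

print(f"Implied daily rate: ${low_rate:,.0f} to ${high_rate:,.0f}")
print(f"Average days per respondent: {days_per_respondent:.1f}")
```

Under these assumptions the estimate corresponds to roughly $320 to $434 per reported day, and to about 25 days per respondent.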

Gender-Based Analysis Plus is an analytical tool that was used to examine the success rate of female scientists applying for and receiving funding. Additional identity factors were explored to see if there were any barriers or challenges for certain groups of scientists accessing the funds. The findings of these analyses are presented below.

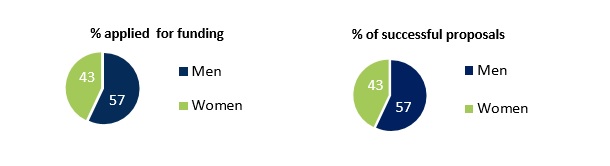

Success rate of female researchers

For a sample of 524 projects matched to administrative data, analysis showed that, although more men than women applied to the funding programs, the proportion of applicants who were women and the proportion of successful applicants who were women were equal.

Description

This is a pie chart showing that 43 percent of applicants to the funding programs were women and 57 percent were men. Forty-three percent of successful proposals went to women and 57 percent went to men.

In total, male scientists received $43,081,519 and female applicants received $27,092,847. On average, successful female applicants received 85 cents for every dollar received by their male counterparts.
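The relationship between the funding totals and the per-dollar comparison can be sketched with simple arithmetic. The example below assumes that the rounded 43/57 split of successful proposals from the pie chart can stand in for the exact counts, which the summary does not give; the function name `per_award_ratio` is hypothetical. Because the inputs are rounded, the result is close to, but not identical to, the reported 85 cents.

```python
# Totals reported in the summary.
MALE_TOTAL = 43_081_519
FEMALE_TOTAL = 27_092_847

# Rounded shares of successful proposals from the pie chart
# (assumption: used here in place of the unpublished exact counts).
FEMALE_SHARE = 0.43
MALE_SHARE = 0.57


def per_award_ratio(female_total: float, male_total: float,
                    female_share: float, male_share: float) -> float:
    """Ratio of average female to average male funding per award.

    Each average is total / (share * N); the number of awards N
    cancels out of the ratio, so only the shares are needed.
    """
    return (female_total / female_share) / (male_total / male_share)


total_ratio = FEMALE_TOTAL / MALE_TOTAL
award_ratio = per_award_ratio(FEMALE_TOTAL, MALE_TOTAL,
                              FEMALE_SHARE, MALE_SHARE)

print(f"Female-to-male ratio of total dollars: {total_ratio:.2f}")
print(f"Female-to-male ratio per successful award: {award_ratio:.2f}")
```

With the rounded shares, the per-award ratio comes out near 0.83, while the ratio of total dollars is about 0.63. The gap between the two reflects the fact that fewer awards went to women, not only that the awards were smaller on average.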

Perceived barriers

There are few perceived barriers related to gender, official language, ethnicity, age and geographic location. There is a perception that career status can be a barrier to accessing funds. To help reduce any perceived barriers, best practices could be implemented and staff roles and responsibilities could be clarified as they pertain to supporting equity and diversity in the allocation of the funds.

Impact of communication practices

The piecemeal approach to communicating about funding opportunities could represent the greatest barrier to equitable access to the 16 funding programs. One third of respondents to the applicant survey indicated that the process for making them aware of calls for proposals and available funds is not transparent (33% answered "not at all" or "to a limited extent").

Description

This stacked bar graph shows that 36% of respondents to the applicant survey strongly or somewhat agree that “all eligible applicants have equal access to the research funds available through these funding programs.” 45% of respondents to the reviewer survey strongly or somewhat agree that “all eligible applicants have equal access to the research funds available through these funding programs.”

13% of respondents to the applicant survey neither agree nor disagree that “all eligible applicants have equal access to the research funds available through these funding programs.” 10% of respondents to the reviewer survey neither agree nor disagree that “all eligible applicants have equal access to the research funds available through these funding programs.”

25% of respondents to the applicant survey strongly or somewhat disagree that “all eligible applicants have equal access to the research funds available through these funding programs.” 27% of respondents to the reviewer survey strongly or somewhat disagree that “all eligible applicants have equal access to the research funds available through these funding programs.”

26% of respondents to the applicant survey don’t know. 18% of respondents to the reviewer survey don’t know.

Recommendations

- It is recommended that the Assistant Deputy Minister, Ecosystem and Oceans Science transform the overall research funding allocation process. Consideration should be given to streamlining and developing a model aimed at increasing overall efficiencies across EOS’ research universe.

- It is recommended that the Assistant Deputy Minister, Ecosystem and Oceans Science adjust the funding allocation and research processes to increase client engagement at key touchpoints. Improved engagement with end-users should help better align research projects with their needs. It will also allow for information and research progress to be communicated to clients at key points in the process, including at the end when research results are available.

- It is recommended that the Assistant Deputy Minister, Ecosystem and Oceans Science standardize communication about funding opportunities across the sector's funding programs to reduce real and/or perceived inequities in how eligible scientists receive information. While there is wide support for the use of both directed and competitive solicitation of research proposals, better communication about the rationale for choosing one funding model over the other, including the rationale for inviting a particular group of scientists over others, would improve overall transparency.
